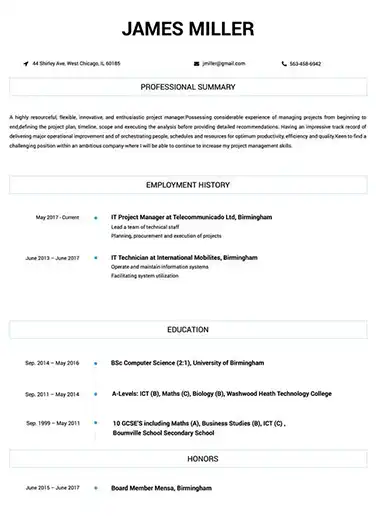

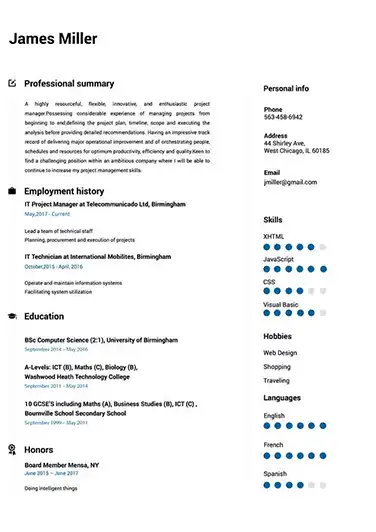

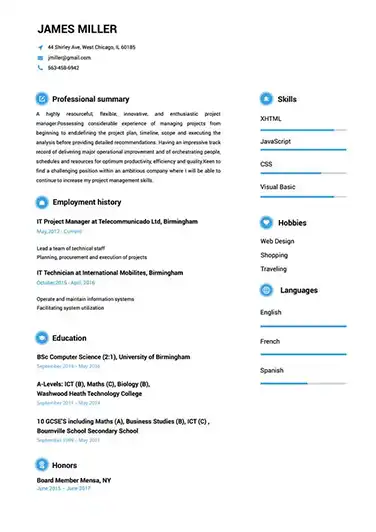

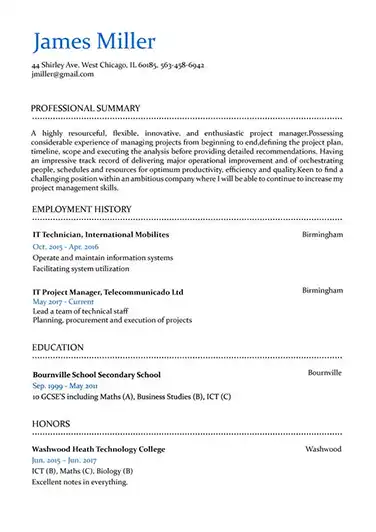

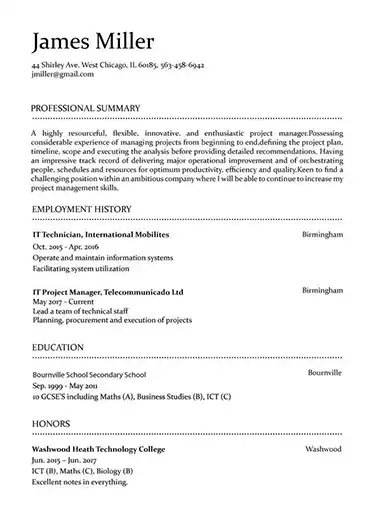

Build your resume in 15 minutes

Create an awesome resume that meets the expectations of potential employers with our selection of professional, field-tested resume templates.

Big Data Engineer: Resume Samples & Writing Guide

garyhawkins45@icloud.com

957-577-5640

Professional Summary

Employment history

- Integrating data from multiple sources

- Creating and maintaining technical documentation

- Writing, testing and maintaining code for data processing

- Implement data governance policies and procedures

- Monitoring and troubleshooting data systems

- Collaborate with stakeholders to define requirements and design solutions

- Building and maintaining data warehouses and data lakes

- Develop data models and data warehouses to facilitate data analysis

- Develop and deploy big data solutions in a distributed computing environment

Education

Skills

uptonquinn@hotmail.com

733-531-4432

Employment history

- Integrating data from multiple sources

- Analyzing and optimizing data systems for performance

- Writing, testing and maintaining code for data processing

- Collaborating with cross-functional teams to ensure data accuracy

- Perform data analysis to identify trends and patterns in large datasets

- Integrating data from multiple sources

- Implementing data security and privacy protocols

- Working with stakeholders to define and document data requirements

- Monitoring and troubleshooting data systems

Education

Skills

ingram.albert@hotmail.com

724-511-1963

Professional Summary

Employment history

- Staying up-to-date with emerging technologies and trends in big data

- Develop data models and data warehouses to facilitate data analysis

- Creating and maintaining technical documentation

- Developing and maintaining ETL processes

- Develop and maintain ETL pipelines for data ingestion and transformation

- Integrating data from multiple sources

- Develop and maintain data pipelines and data integration processes

- Build and maintain data warehouses and data marts

- Perform data analysis to identify trends and patterns in large datasets

Education

Skills

xaviera.parker@aol.com

738-345-8197

Professional Summary

Employment history

- Developing and maintaining ETL processes

- Creating and maintaining technical documentation

- Collaborate with stakeholders to define requirements and design solutions

- Implementing data pipelines and architectures for data processing

- Design, develop, and implement big data architectures

- Develop and maintain data visualization tools and dashboards

- Writing, testing and maintaining code for data processing

- Working with stakeholders to define and document data requirements

- Collaborate with stakeholders to define requirements and design solutions

Education

Skills

adam.miller@hotmail.com

764-291-5388

Professional Summary

Employment history

- Developing and maintaining ETL processes

- Build and maintain data warehouses and data marts

- Writing, testing and maintaining code for data processing

- Perform data analysis to identify trends and patterns in large datasets

- Develop and implement data security and privacy measures

- Develop data models and data warehouses to facilitate data analysis

- Working with stakeholders to define and document data requirements

- Identifying and resolving data-related issues

- Integrating data from multiple sources

Education

Skills

Big Data Engineer Job Descriptions, Explained

If you're applying for a big data engineer position, it's important to tailor your resume to the specific job requirements in order to differentiate yourself from other candidates. Including accurate and relevant information that directly aligns with the job description can greatly increase your chances of securing an interview with potential employers.

When crafting your resume, be sure to use action verbs and a clear, concise format to highlight your relevant skills and experience. Remember, the job description is your first opportunity to make an impression on recruiters, so pay close attention to the details and make sure you're presenting yourself in the best possible light.

big data engineer

- Responsible for data processing (ETL) and device risk analysis for over 4 million terminals in the Ministry of Public Security, handling virus attack, network flow, and business system access data on DataWorks on the Alibaba Cloud platform, from Nov. 2017 to Feb. 2019.

- Worked as a big data analyst using Scala on the Spark platform, analyzing the company's own data, including browser history and software usage (development tools, chat, gaming), from May 2017 to Nov. 2017.

- Worked as a big data model developer using Java on the Hadoop platform to analyze users' computer data, including business system access during and after working hours and the data for the keys used to access the business system. This work, from Oct. 2016 to May 2017, caught more than 10 people stealing data from the business system.

- Started by building the test cluster environment for the company's big data platform, including Hadoop, Hive, Elasticsearch, and Spark, during the first month at the company (Sep. 2016 to Oct. 2017).

big data engineer

- Implemented a Java (MapReduce) based process to cleanse the data before indexing.

- Implemented Hive queries as part of QA automation.

- Implemented a Python-based program to enrich the data.

- Set up new clusters on Amazon Web Services (AWS) and maintained them to resolve any issues.

- Took regular backups of the indexed data on Amazon S3.

- Transferred data from one cluster to another.

- Wrote HBase queries to retrieve data for other teams.

big data engineer

- Designed and built data processing pipelines using tools and frameworks in the Hadoop ecosystem

- Designed and built ETL pipelines to automate data ingestion and facilitate data analysis

- Built Streaming services including Window Processing using Flink

- Built Batch services including customized transparent Thrift-Server on data stored on HDFS and Cassandra using Spark

- Designed the Kafka topic partitioning and Cassandra schema according to the processing criteria

senior big data engineer

- Founding member of Consus R&D team to build data infrastructure event streaming for 800 million users per month

- Led the organization-wide effort to make data lineage, data modeling standardization, and data dictionary.

- Implementation of data ingestion in Apache Cassandra.

- Daily Updates to the Director on the progress of development and tasks assigned.

big data engineer

- As a Big Data Engineer, responsible for the development and support of big data projects, including requirement analysis and cross-functional team interactions.

- Currently taking care of multiple application builds for Barclaycard Business on the Cloudera Hadoop ecosystem.

- Taking handover of new applications going into production from the Dev team.

- Supporting live services (Incident, Problem, and Change Management).

- Development and deployment of the code of the new project.

- Working with the Admin team for patching and CDH upgrade activities.

- Status reporting of application in BAU to stakeholders.

Big Data Engineer Job Skills

For a big data engineer position, your job skills are a key factor in demonstrating your value to the company and showing recruiters that you're the right fit for the role. It's important to be specific when highlighting your skills and ensure that they are directly aligned with the job requirements, as this can greatly improve your chances of being hired. By showcasing your relevant skills and experience, you can make a compelling case for why you're the best candidate for the job.

How to include technical skills in your resume:

Technical skills are a set of specialized abilities and knowledge required to perform a particular job effectively. Some examples of technical skills are data analysis, project management, software proficiency, and programming languages, to name a few.

Add the technical skills that will get you hired in your career field with our simple-to-use resume builder. Select your desired resume template and, once you reach the skills section of the builder, manually write in the skill or simply click on "Add more skills". This will automatically generate the best skills for your career field. Choose your skill level, and hit "Save & Next."

- Data Mining

- Data Modeling

- Machine Learning

- Data Warehousing

- ETL

- Hadoop

- SQL

- Programming

- AWS

- Cloud Computing

- NoSQL

- Data Visualization

- Data Analysis

- Linux

- Java

- Scala

- Python

- Bash

- Apache Spark

- MapReduce

- Kubernetes

- Data Mining

- Data Warehousing

- Hadoop

- Spark

- Machine Learning

- Data Visualization

- ETL

- Python

- Java

- Scala

- SQL

- NoSQL

- Cloud Computing

- Linux

- Bash

- AWS

- Azure

- MapReduce

- Natural Language Processing

- Data Security

- Tableau

How to include soft skills in your resume:

Soft skills are non-technical skills that relate to how you work and that can be used in any job. Including soft skills such as time management, creative thinking, teamwork, and conflict resolution demonstrates your problem-solving abilities and shows that you navigate challenges and changes in the workplace efficiently.

Add competitive soft skills to make your resume stand out to recruiters! Simply select your preferred resume template and, in the skills section, enter the skills manually or use the "Add more skills" option. Our resume builder will generate the most relevant soft skills for your career path. Choose your proficiency level for each skill, and then click "Save & Next" to proceed to the next section.

- Communication

- Interpersonal

- Leadership

- Time Management

- Problem Solving

- Decision Making

- Critical Thinking

- Creativity

- Adaptability

- Teamwork

- Organization

- Planning

- Public Speaking

- Negotiation

- Conflict Resolution

- Research

- Analytical

- Attention to Detail

- Self-Motivation

- Stress Management

- Collaboration

- Coaching

- Mentoring

- Listening

- Networking

- Strategic Thinking

- Emotional Intelligence

- Flexibility

- Reliability

- Professionalism

- Computer Literacy

- Technical

- Data Analysis

- Project Management

- Customer Service

- Presentation

- Written Communication

- Social Media

- Troubleshooting

- Quality Assurance

- Supervisory

- Risk Management

- Database Management

- Training

- Innovation

- Documentation

- Accounting

- Financial Management

- Visualization

- Reporting

- Business Acumen

- Process Improvement

- Relationship Management

How to Improve Your Big Data Engineer Resume

Navigating resume pitfalls can mean the difference between landing an interview or not. Missing job descriptions or unexplained work history gaps can cause recruiters to hesitate. Let's not even talk about the impact of bad grammar, and forgetting your contact info could leave your potential employer hanging. Aim to be comprehensive, concise, and accurate.

Employment history

- Integrating data from multiple sources

- Working with stakeholders to define and document data requirements

- Analyzing and optimizing data systems for performance

- Research and evaluate emerging big data technologies and tools

- Monitoring and troubleshooting data systems

- Develop and maintain ETL pipelines for data ingestion and transformation

- Designing and developing big data solutions

- Develop data models and data warehouses to facilitate data analysis

- Build and maintain data warehouses and data marts

Education

Skills

Provide your Contact Information and Address Year Gaps

Always explain any gaps in your work history to your advantage.

Key Insights

- Employers want to know what you've accomplished, so make sure to explain any gaps using a professional summary.

- Adding extra details and context to explain why you have a gap in your work history shows employers you are a good fit for the position.

How to Optimize Your Big Data Engineer Resume

Keep an eye out for these resume traps. Neglecting to detail your job roles or explain gaps in your career can lead to unnecessary doubts. Grammar blunders can reflect negatively on you, and without contact information, how can employers reach you? Be meticulous and complete.

elliscal28@inbox.com

736-755-8746

Employment history

- Bulding and maintainin data warehouses and data lakes

- Developing data modles and data warehouss to faciliate data analysis

- Designing and developing big data solutons

- Implement data governance policies and proceduers

- Develop and maintin ETL pipelines for data ingetion and transformation

- Develop and maintin data visualisation tools and dashboards.

- "I went to the store and bought some food."

- "I went too the store and bough some food."

Education

Skills

Include Job Descriptions and Avoid Bad Grammar

Avoid making the wrong first impression by proofreading your resume.

Key Insights

- Spelling errors and typos are the most common mistakes recruiters see in resumes, and by simply avoiding them you can move ahead in the hiring process.

- Before submitting your resume, double check to avoid typos.

Big Data Engineer Cover Letter Example

A cover letter can be a valuable addition to your job application when applying for a big data engineer position. Cover letters provide a concise summary of your qualifications, skills, and experience, and they also give you an opportunity to explain why you're the best fit for the job. Crafting a cover letter that showcases your relevant experience and enthusiasm for the big data engineer role can significantly improve your chances of securing an interview.

Gary Hawkins

garyhawkins45@icloud.com

957-577-5640

386 Pine Valley Avenue, Cottonwood, CA 93307

Microsoft

Redmond, Washington

To the Recruitment Team at Microsoft

As a Big Data Engineer with a proven track record of success in Information Technology (IT), I am excited to apply for the Chief Big Data Engineer position at Microsoft. I believe that my skills and expertise would make a valuable contribution to your team.

Throughout my life, I have been passionate about Database Management and have pursued opportunities to make a difference in this field. My experience in various areas, not just in Information Technology (IT), has given me the opportunity to develop my skills in Public Speaking and Financial Management, which I am excited to apply to the role at Microsoft. I am eager to work with a team that shares my values and to help your organization achieve its well-defined goals.

Thank you for considering my application for the Chief Big Data Engineer position. With my skills and the amazing team at this organization, I am confident that I can contribute to your organization's success and make a meaningful impact. I look forward to a future where we can work together.

Cordially,

Gary Hawkins

957-577-5640

garyhawkins45@icloud.com

Showcase your most significant accomplishments and qualifications with this cover letter.

Personalize this cover letter in just a few minutes with our user-friendly tool!
