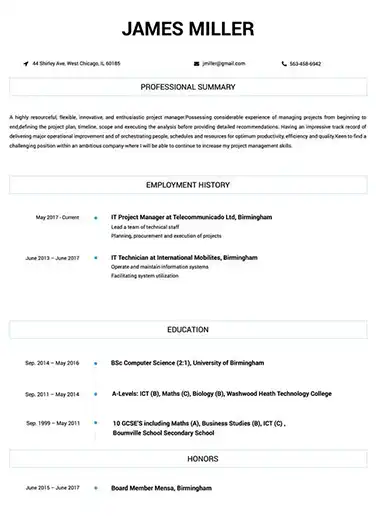

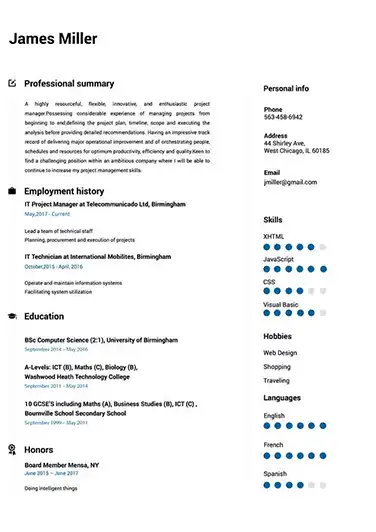

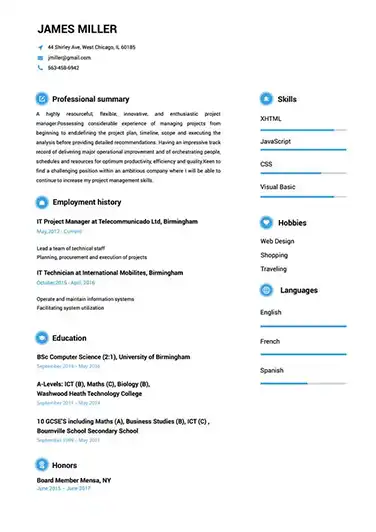

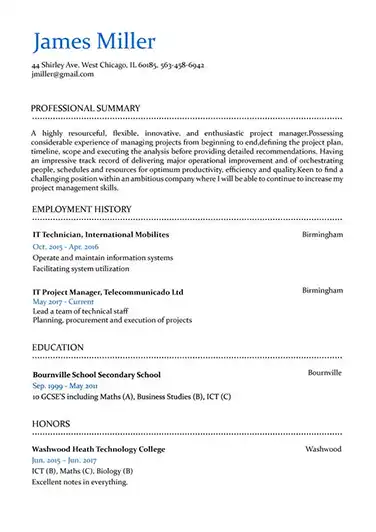

Use This Template

Use This Template

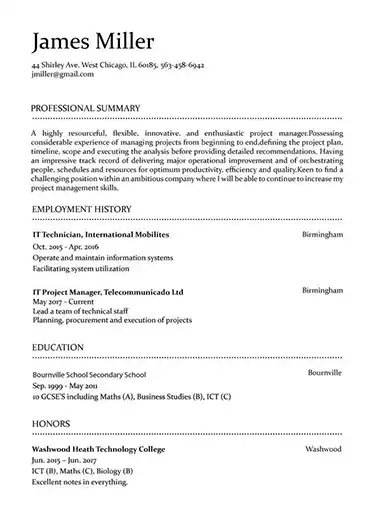

Build your resume in 15 minutes

Create an awesome resume that meets the expectations of potential employers with our selection of professional, field-tested resume templates.

Hadoop Developer: Resume Samples & Writing Guide

quinn_virginia@yandex.com

952-444-1785

Employment history

- Develop and maintain Hadoop applications and data pipelines

- Develop and maintain MapReduce jobs

- Design and develop software solutions using Hadoop technologies

- Develop and maintain Hive and Pig scripts

- Develop and maintain Spark applications

- Design and implement data warehouse optimization strategies

- Design and implement data warehouse optimization strategies

- Integrate Hadoop with other enterprise systems

- Develop and maintain MapReduce jobs

Education

Skills

jeffnelson18@yandex.com

744-764-1057

Employment history

- Integrate Hadoop with other enterprise systems

- Develop and maintain MapReduce jobs

- Design and develop ETL processes to ingest and transform data

- Implement security and data protection measures

- Develop and maintain Spark applications

- Design and implement data warehouse optimization strategies

- Design and develop ETL processes to ingest and transform data

- Develop and maintain MapReduce jobs

- Analyze and optimize Hadoop clusters to ensure performance and scalability

Education

Skills

baileyandrew@yandex.com

692-152-0095

Employment history

- Integrate Hadoop with other enterprise systems

- Develop and maintain Hive and Pig scripts

- Design and develop software solutions using Hadoop technologies

- Implement security and data protection measures

- Develop and maintain MapReduce jobs

- Analyze and optimize Hadoop clusters to ensure performance and scalability

- Develop and maintain MapReduce jobs

- Write complex SQL queries to extract data from Hadoop

- Integrate Hadoop with other enterprise systems

Education

Skills

harry.ingram@aol.com

771-342-2297

Employment history

- Develop and maintain Spark applications

- Monitor and tune Hadoop clusters for optimal performance

- Develop and maintain MapReduce jobs

- Design and develop software solutions using Hadoop technologies

- Develop and maintain Hadoop applications and data pipelines

- Design and implement data warehouse optimization strategies

- Develop and maintain MapReduce jobs

- Troubleshoot and debug Hadoop clusters

- Implement security and data protection measures

Education

Skills

longjack@yahoo.com

616-200-8428

Professional Summary

Employment history

- Develop and maintain MapReduce jobs

- Monitor and tune Hadoop clusters for optimal performance

- Develop and maintain Hive and Pig scripts

- Develop and maintain MapReduce jobs

- Develop and maintain Hadoop applications and data pipelines

- Develop and maintain Hadoop-based data processing applications

- Develop data models and data lake architectures

- Design and implement data warehouse optimization strategies

- Develop and maintain Hadoop applications and data pipelines

Education

Skills

Hadoop Developer Job Descriptions, Explained

If you're applying for a Hadoop developer position, tailor your resume to the specific job requirements to differentiate yourself from other candidates. Including accurate, relevant information that aligns directly with the job description can greatly increase your chances of securing an interview.

When crafting your resume, be sure to use action verbs and a clear, concise format to highlight your relevant skills and experience. Remember, the job description is your first opportunity to make an impression on recruiters, so pay close attention to the details and make sure you're presenting yourself in the best possible light.

Hadoop Developer

- Work with the application team to design and develop an effective Hadoop solution. Be actively engaged and responsible in the development process

- Work to tight deadlines and provide regular progress updates against agreed milestones

- Load data onto the cluster, build orchestration jobs using Sqoop/Flume, and write Hive queries to support analysis

- The data volume is around 750 GB, which makes it well suited to Hadoop-style computation. Data is loaded onto the clusters with loading utilities such as Sqoop and then cleaned.

- Various business-defined algorithms are then applied to the data loaded in Hive tables to produce an indicator variable

Hadoop Developer

- Project Name: NPM (NH21 component & YUKON component)

- Organization: Harman

- Client: British Telecom

- Role: Hadoop Developer

- Hadoop-based project for network performance monitoring and reporting. The NPM solution is designed to monitor, optimize, and utilize the network effectively.

- It monitors the performance of various network devices. NPM performs hourly, daily and monthly aggregations on a large number of metrics received for each network sub-element it monitors.

- These insights help the Network Operations Centre (NOC) team to monitor and maintain performance of the network.

Hadoop Developer

- Worked as a Hadoop developer for Reliance Jio media apps such as Jio TV, Jio Music, Jio Video On Demand, Jio News, and Jio Magazines. Collected live data as users interacted with each application, stored it in databases, and displayed user behavior on dashboards using Elasticsearch.

- Provided end-to-end implementation of data flows from different internal systems to the Hadoop data lake using big data tools such as Kafka, Flume, and Hive.

- Responsible for preparing and updating technical designs.

- Provided daily status updates and followed Scrum processes.

Hadoop Developer

- Analyzed the Hortonworks Hadoop architecture of production servers and clusters.

- Analyzed the Hadoop cluster and various big data analytics tools, including Oozie, HBase, Hive, NoSQL databases, and Sqoop.

- Imported and exported data between different sources, such as MySQL, SQL Server, and the local file system, and HDFS and Hive.

- Wrote Hive UDFs to extract data from staging tables.

- Wrote Linux shell scripts to automate Sqoop commands and Oozie workflows that import multiple tables at once into Hive.

- Created Hive tables, loaded them with data, and wrote Hive queries that run internally as MapReduce jobs.

- Wrote efficient Oozie workflows, sub-workflows, and coordinators for data importing and exporting

Hadoop Developer

- Migrated data from IBM Netezza to Hadoop

- Developed the data pipeline from Level 1 through Level 3.

- Experienced in writing scripts using HQL

- Developed APIs using Apache Spark, SBT, and IntelliJ IDEA.

Hadoop Developer Job Skills

For a Hadoop developer position, your job skills are a key factor in demonstrating your value to the company and showing recruiters that you're the right fit for the role. Be specific when highlighting your skills and make sure they align directly with the job requirements, as this can greatly improve your chances of being hired. By showcasing your relevant skills and experience, you can make a compelling case for why you're the best candidate for the job.

How to include technical skills in your resume:

Technical skills are a set of specialized abilities and knowledge required to perform a particular job effectively. Some examples of technical skills are data analysis, project management, software proficiency, and programming languages, to name a few.

Add the technical skills that will get you hired in your career field with our simple-to-use resume builder. Select your desired resume template. Once you reach the skills section of the builder, write in the skill manually or simply click "Add more skills". This will automatically generate the best skills for your career field. Choose your skill level, then hit "Save & Next."

- Data Mining

- Big Data

- Hadoop

- Hive

- Pig

- MapReduce

- Spark

- NoSQL

- Java

- Python

- Linux

- Shell Scripting

- Scala

- Apache

- AWS

- Cloud Computing

- ETL

- Kubernetes

- SQL

- MongoDB

How to include soft skills in your resume:

Soft skills are non-technical skills that relate to how you work and that can be used in any job. Including soft skills such as time management, creative thinking, teamwork, and conflict resolution demonstrates your problem-solving abilities and shows that you navigate challenges and changes in the workplace efficiently.

Add competitive soft skills to make your resume stand out to recruiters! Simply select your preferred resume template and, in the skills section, enter the skills manually or use the "Add more skills" option. Our resume builder will generate the most relevant soft skills for your career path. Choose your proficiency level for each skill, and then click "Save & Next" to proceed to the next section.

- Communication

- Interpersonal

- Leadership

- Time Management

- Problem Solving

- Decision Making

- Critical Thinking

- Creativity

- Adaptability

- Teamwork

- Organization

- Planning

- Public Speaking

- Negotiation

- Conflict Resolution

- Research

- Analytical

- Attention to Detail

- Self-Motivation

- Stress Management

- Collaboration

- Coaching

- Mentoring

- Listening

- Networking

- Strategic Thinking

- Emotional Intelligence

- Flexibility

- Reliability

- Professionalism

- Computer Literacy

- Technical

- Data Analysis

- Project Management

- Customer Service

- Presentation

- Written Communication

- Social Media

- Troubleshooting

- Quality Assurance

- Supervisory

- Risk Management

- Database Management

- Training

- Innovation

- Documentation

- Accounting

- Financial Management

- Visualization

- Reporting

- Business Acumen

- Process Improvement

- Relationship Management

How to Improve Your Hadoop Developer Resume

Navigating resume pitfalls can mean the difference between landing an interview or not. Missing job descriptions or unexplained work history gaps can cause recruiters to hesitate. Bad grammar makes a poor impression, and forgetting your contact info could leave your potential employer hanging. Aim to be comprehensive, concise, and accurate.

Professional Summary

Employment history

- Integrate Hadoop with other enterprise systems

- Analyze and optimize Hadoop clusters to ensure performance and scalability

- Develop and maintain MapReduce jobs

- Design and develop software solutions using Hadoop technologies

- Design and implement data warehouse optimization strategies

- Design and develop ETL processes to ingest and transform data

Education

Skills

Include your Contact Information and Job Descriptions

Missing job descriptions lessen your chances of getting hired.

Key Insights

- Employers want to know what you've accomplished, so make sure to include descriptions for all of your previous jobs.

- Keep job descriptions short but don't just list your jobs.

- Never copy-paste a job description to post on your resume. Get inspired and use tools to help you write customized descriptions.

How to Optimize Your Hadoop Developer Resume

Keep an eye out for these resume traps. Neglecting to detail your job roles or explain gaps in your career can lead to unnecessary doubts. Grammar blunders can reflect negatively on you, and without contact information, how can employers reach you? Be meticulous and complete.

fostervictor57@hotmail.com

894-975-6795

Employment history

- Design and devellop ETL proccesses to ingess and tranform data

- Write complex SQl quiries to extract data from Hadoop

- Analize and optimise Hadoop clusters to ensure performance and scalabilty

- Write complex SQL querieS to extract data from Hadoop

- Analize and optimize Hadoop clusters to ensure performance and scalability

- Implemnet security and data protection measures.

- Develp datam odels an dat lake architectures

- Monitor an tune Hadoop clusters fo optimal performance

- Implemen securit an data protectio measures

Education

Skills

Correct Grammar and Address Gap Years in Your Resume

Don't leave unexplained gaps in your work history.

Key Insights

- When explaining gaps in your employment section, start by being honest.

- Elaborate on the gap and show that you never stopped learning.

- Explain any gap in your work history by highlighting the new skills you gained.

Hadoop Developer Cover Letter Example

A cover letter can be a valuable addition to your job application when applying for a Hadoop developer position. Cover letters provide a concise summary of your qualifications, skills, and experience, and they give you an opportunity to explain why you're the best fit for the job. Crafting a cover letter that showcases your relevant experience and enthusiasm for the Hadoop developer role can significantly improve your chances of securing an interview.

Virginia Quinn

quinn_virginia@yandex.com

952-444-1785

308 Cedarwood Court, Grand View, ID 83624

Apple

Cupertino, California

Esteemed Apple Hiring Team,

I am writing to express my interest in the Lead Hadoop Developer role at Apple. As a Hadoop Developer with 8 years of experience in Software Development, I am confident that I have the necessary skills and expertise to succeed in this position.

As someone who has faced challenges in various areas of my life and has overcome them, I am confident in my ability to adapt and thrive in any environment. I have developed a reputation for being a collaborative team player and an effective problem solver, which has been instrumental in my career's success. With my experience and passion for Software Development, I am excited to apply my skills to this role and contribute to your organization's growth and success.

Thank you for considering my application for the Lead Hadoop Developer position. I hope you will allow me to show you what I am capable of bringing to your organization and how we can work together to make an impact on the industry.

With gratitude,

Virginia Quinn

952-444-1785

quinn_virginia@yandex.com

Showcase your most significant accomplishments and qualifications with this cover letter.

Personalize this cover letter in just a few minutes with our user-friendly tool!
